mastering the curl command line with Daniel Stenberg

- 1. mastering the command line September 5, 2024 Daniel Stenberg

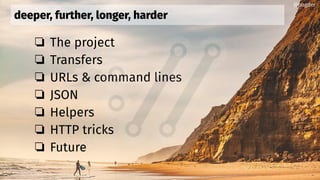

- 3. deeper, further, longer, harder @bagder
  - The project
  - Transfers
  - URLs & command lines
  - JSON
  - Helpers
  - HTTP tricks
  - Future

- 6. November 1996: httpget; August 1997: urlget; March 1998: curl; August 2000: libcurl

- 7. We never break existing functionality

- 8. curl runs in all your devices

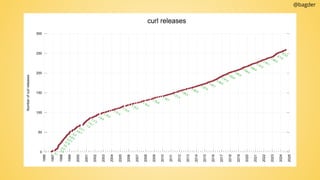

- 9. @bagder

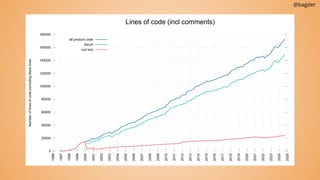

- 10. @bagder

- 11. @bagder

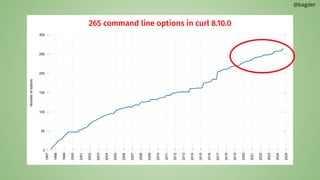

- 12. 265 command line options in curl 8.10.0

- 13. use a recent curl version

- 15. Parallel transfers
  - By default, URLs are transferred serially, one by one
  - -Z (--parallel) enables parallel transfers
  - By default up to 50 simultaneous transfers
  - Change with --parallel-max [num]
  - Works for downloads and uploads

      $ curl -Z -O https://example.com/[1-1000].jpg

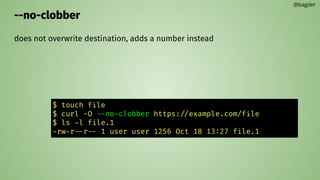

- 16. --no-clobber
  - Does not overwrite the destination; adds a number to the file name instead

      $ touch file
      $ curl -O --no-clobber https://example.com/file
      $ ls -l file.1
      -rw-r--r-- 1 user user 1256 Oct 18 13:27 file.1

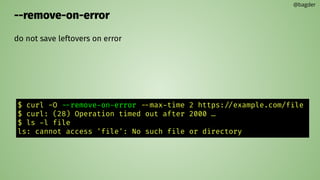

- 17. --remove-on-error
  - Do not save leftovers on error

      $ curl -O --remove-on-error --max-time 2 https://example.com/file
      curl: (28) Operation timed out after 2000 …
      $ ls -l file
      ls: cannot access 'file': No such file or directory

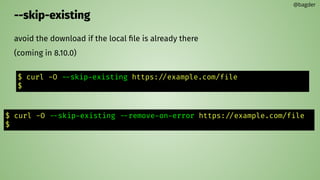

- 18. --skip-existing
  - Avoid the download if the local file is already there (coming in 8.10.0)

      $ curl -O --skip-existing https://example.com/file
      $ curl -O --skip-existing --remove-on-error https://example.com/file

- 19. Transfer controls
  - Stop slow transfers: --speed-limit <speed> --speed-time <seconds>
  - Transfer rate limiting:

      curl --limit-rate 100K https://example.com

  - No more than this number of transfer starts per N time units:

      curl --rate 2/s https://example.com/[1-20].jpg
      curl --rate 3/2h https://example.com/[1-20].html
      curl --rate 14/3m https://example.com/day/[1-365]/fun.html

  - Truly limit the maximum file size accepted:

      curl --max-filesize 238M https://example.com/the-biggie -O
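To get a feel for what a --rate value such as 14/3m means, it can help to convert it into an average gap between transfer starts. The helper below is only a sketch of that arithmetic: `rate_interval_ms` is a hypothetical name, it assumes the `count/[number]unit` form shown on the slide with the units s, m, h and d, and it does not claim to mirror how curl schedules transfers internally.

```shell
# rate_interval_ms SPEC: print the average gap, in milliseconds, between
# transfer starts for a --rate style SPEC such as "2/s", "3/2h" or "14/3m".
# Illustration only: this is plain arithmetic, not curl's own scheduler.
rate_interval_ms() {
  spec=$1
  count=${spec%%/*}          # transfers allowed per period, e.g. 14
  period=${spec#*/}          # period with unit, e.g. 3m
  number=${period%?}         # numeric part, e.g. 3 (empty when omitted)
  unit=${period#"$number"}   # trailing unit letter: s, m, h or d
  [ -n "$number" ] || number=1
  case $unit in
    s) unit_ms=1000 ;;
    m) unit_ms=60000 ;;
    h) unit_ms=3600000 ;;
    d) unit_ms=86400000 ;;
    *) echo "unknown unit: $unit" >&2; return 1 ;;
  esac
  echo $(( number * unit_ms / count ))   # integer division
}

rate_interval_ms 2/s     # prints 500
rate_interval_ms 3/2h    # prints 2400000
rate_interval_ms 14/3m   # prints 12857
```

So 14/3m works out to roughly one transfer start every 12.9 seconds on average.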

- 21. Massaging URL “queries”
  - Queries are often name=value pairs separated by ampersands (&): name=daniel & tool=curl & age=old
  - Add query parts to the URL with --url-query [content]
  - content is (for example) “name=value”
  - “value” gets URL encoded to keep the URL fine
  - URL anatomy: scheme://user:password@host:1234/path?query#fragment

- 22. --url-query [content]
  - Each command is followed by the URL it produces:

      curl --url-query "=hej()" https://example.com/
      https://example.com/?hej%28%29
      curl --url-query "name=hej()" https://example.com/?search=this
      https://example.com/?search=this&name=hej%28%29
      curl --url-query "@file" https://example.com/
      https://example.com/?[url encoded file content]
      curl --url-query "name@file" https://example.com/
      https://example.com/?name=[url encoded file content]
      curl --url-query "+%20%20" https://example.com/
      https://example.com/?%20%20

- 23. Config file
  - “Command lines in a file”
  - One option (plus argument) per line
  - $HOME/.curlrc is used by default
  - -K [file] or --config [file]
  - Can be read from stdin
  - Can be generated (and huge)
  - 10MB line length limit

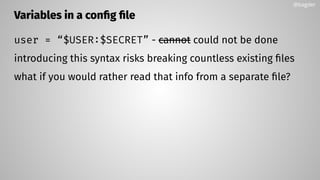
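Since a config file is just one option per line, it is easy to generate one with a script. The following is a minimal sketch of that idea; the file name `urls.cfg`, the host and the output file names are placeholders chosen for illustration, not anything from the talk.

```shell
# Generate a curl config file listing 20 transfers, one option per line.
# urls.cfg, the host and the image-N.jpg names are illustrative placeholders.
: > urls.cfg                       # start from an empty file
i=1
while [ "$i" -le 20 ]; do
  printf 'url = "https://example.com/%s.jpg"\n' "$i" >> urls.cfg
  printf 'output = "image-%s.jpg"\n' "$i" >> urls.cfg
  i=$(( i + 1 ))
done
wc -l < urls.cfg                   # 40 lines: one url and one output per transfer
```

The generated file could then be handed to curl with something like `curl --parallel --config urls.cfg`, or the generator's output could be piped straight in with `--config -`.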

- 24. Variables in a config file
  - user = "$USER:$SECRET" could not be done
  - Introducing this syntax risks breaking countless existing files
  - What if you would rather read that info from a separate file?

- 25. --variable
  - Sets a curl variable on the command line or in a config file
  - On the command line: --variable name=content
  - In a config file: variable = name=content
  - There can be an unlimited number of variables
  - A variable can hold up to 10M of content
  - Variables are set in left-to-right order as curl parses the command line or config file

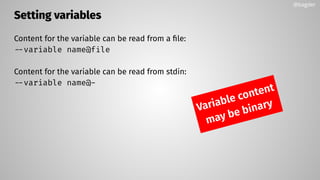

- 26. Setting variables
  - Content for the variable can be read from a file: --variable name@file
  - Content for the variable can be read from stdin: --variable name@-
  - Variable content may be binary

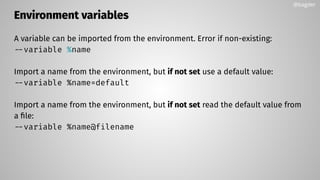

- 27. Environment variables
  - A variable can be imported from the environment; it is an error if it does not exist: --variable %name
  - Import a name from the environment, but if not set use a default value: --variable %name=default
  - Import a name from the environment, but if not set read the default value from a file: --variable %name@filename

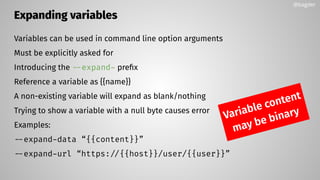

- 28. Expanding variables
  - Variables can be used in command line option arguments
  - Must be explicitly asked for, using the --expand- prefix
  - Reference a variable as {{name}}
  - A non-existing variable expands as blank/nothing
  - Trying to show a variable with a null byte causes an error
  - Variable content may be binary
  - Examples:

      --expand-data "{{content}}"
      --expand-url "https://{{host}}/user/{{user}}"

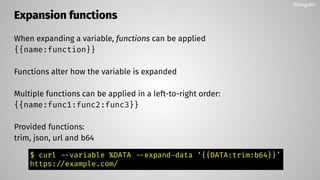

- 29. Expansion functions
  - When expanding a variable, functions can be applied: {{name:function}}
  - Functions alter how the variable is expanded
  - Multiple functions can be applied in left-to-right order: {{name:func1:func2:func3}}
  - Provided functions: trim, json, url and b64

      $ curl --variable %DATA --expand-data '{{DATA:trim:b64}}' https://example.com/
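Putting the variable slides together, the `user = "$USER:$SECRET"` wish from slide 24 might be written roughly as below. This is a sketch under assumptions: `secret.txt`, `login.cfg` and the password value are made up for illustration, and it relies on the --expand- prefix being accepted in config files the same way other long options are.

```shell
# Hypothetical secret kept outside the config file; the value is made up.
printf 'hunter2\n' > secret.txt

# Quoted 'EOF' keeps the shell from touching {{...}} inside the heredoc.
cat > login.cfg <<'EOF'
# import the user name from the environment (an error if USER is not set)
variable = %USER
# read the password from a separate file instead of storing it here
variable = secret@secret.txt
# expand both variables; trim strips the trailing newline of the file content
expand-user = "{{USER}}:{{secret:trim}}"
url = "https://example.com/"
EOF
```

Running `curl --config login.cfg` would then send the credentials without the password ever appearing in the config file or on the command line.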

- 30. trurl
  - Parses and manipulates URLs
  - Like 'tr' but for URLs
  - Companion tool to curl
  - https://curl.se/trurl/

      $ trurl --url https://curl.se --set host=example.com
      $ trurl --url https://curl.se --get '{host}'
      $ trurl --url https://curl.se/we/are.html --redirect here.html
      $ trurl --url https://curl.se/we/../are.html --set port=8080
      $ trurl --url "https://curl.se?name=hello" --append query=search=string
      $ trurl "https://fake.host/search?q=answers&user=me#frag" --json
      $ trurl "https://example.com?a=home&here=now&thisthen" -g '{query:a}'

- 31. wcurl
  - A command line tool which lets you download URLs without having to remember any parameters
  - Invoke wcurl with a list of URLs you want to download and wcurl picks sane defaults, using curl under the hood
  - https://curl.se/wcurl/

      $ wcurl https://curl.se/download/curl-8.9.1.tar.xz

- 32. JSON

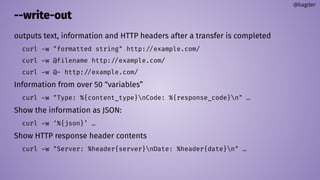

- 33. --write-out
  - Outputs text, information and HTTP headers after a transfer is completed

      curl -w "formatted string" http://example.com/
      curl -w @filename http://example.com/
      curl -w @- http://example.com/

  - Information from over 50 “variables”:

      curl -w "Type: %{content_type}\nCode: %{response_code}\n" …

  - Show the information as JSON:

      curl -w '%{json}' …

  - Show HTTP response header contents:

      curl -w "Server: %header{server}\nDate: %header{date}\n" …

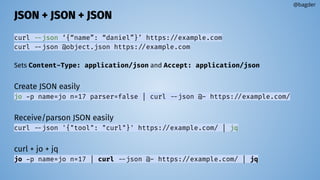
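For anything longer than a couple of fields, the `-w @filename` form keeps the command line readable. The sketch below writes such a format file; `report.fmt` and the choice of fields are illustrative assumptions. Line breaks in the file are output as they are, so no `\n` escapes are used here.

```shell
# Write a --write-out format file; report.fmt is an illustrative name.
# Quoted 'EOF' keeps the shell from interpreting %{...} or anything else.
cat > report.fmt <<'EOF'
Type: %{content_type}
Code: %{response_code}
Server: %header{server}
Total time: %{time_total}s
EOF
```

A typical use might be `curl -s -o /dev/null -w @report.fmt https://example.com/`, which discards the body and prints only the report.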

- 34. JSON + JSON + JSON

      curl --json '{"name": "daniel"}' https://example.com
      curl --json @object.json https://example.com

  - Sets Content-Type: application/json and Accept: application/json
  - Create JSON easily:

      jo -p name=jo n=17 parser=false | curl --json @- https://example.com/

  - Receive/parse JSON easily:

      curl --json '{"tool": "curl"}' https://example.com/ | jq

  - curl + jo + jq:

      jo -p name=jo n=17 | curl --json @- https://example.com/ | jq

- 35. Helpers

- 36. trace

      $ curl -d moo --trace - https://curl.se/
      == Info: processing: https://curl.se/
      == Info: Trying [2a04:4e42:e00::347]:443...
      == Info: Connected to curl.se (2a04:4e42:e00::347) port 443
      == Info: ALPN: offers h2,http/1.1
      => Send SSL data, 5 bytes (0x5)
      0000: 16 03 01 02 00 .....
      == Info: TLSv1.3 (OUT), TLS handshake, Client hello (1):
      => Send SSL data, 512 bytes (0x200)
      0000: 01 00 01 fc 03 03 82 d6 6e 54 af be fa d7 91 c1 ........nT......
      0010: 92 0a 4e bf dc f7 39 a4 53 4d ee 22 18 bc c1 86 ..N...9.SM."....
      0020: 92 96 9a a1 73 88 20 ee 52 63 66 65 8d 06 45 df ....s. .Rcfe..E.
      …
      == Info: CAfile: /etc/ssl/certs/ca-certificates.crt
      == Info: CApath: /etc/ssl/certs
      <= Recv SSL data, 5 bytes (0x5)
      0000: 16 03 03 00 7a ....z
      == Info: TLSv1.3 (IN), TLS handshake, Server hello (2):
      <= Recv SSL data, 122 bytes (0x7a)
      0000: 02 00 00 76 03 03 59 27 14 18 87 2b ec 23 19 ab ...v..Y'...+.#..
      0010: 30 a0 f5 e0 97 30 89 27 44 85 c1 0a c9 d0 9d 74 0....0.'D......t
      …
      == Info: SSL connection using TLSv1.3 / TLS_AES_256_GCM_SHA384
      == Info: ALPN: server accepted h2
      == Info: Server certificate:
      == Info: subject: CN=curl.se
      == Info: start date: Jun 22 08:07:48 2023 GMT
      == Info: expire date: Sep 20 08:07:47 2023 GMT
      == Info: subjectAltName: host "curl.se" matched cert's "curl.se"
      == Info: issuer: C=US; O=Let's Encrypt; CN=R3
      == Info: SSL certificate verify ok.
      …
      == Info: using HTTP/2
      == Info: h2 [:method: POST]
      == Info: h2 [:scheme: https]
      == Info: h2 [:authority: curl.se]
      == Info: h2 [:path: /]
      == Info: h2 [user-agent: curl/8.2.1]
      == Info: h2 [accept: */*]
      == Info: h2 [content-length: 3]
      == Info: h2 [content-type: application/x-www-form-urlencoded]
      == Info: Using Stream ID: 1
      => Send SSL data, 5 bytes (0x5)
      0000: 17 03 03 00 5d ....]
      => Send SSL data, 1 bytes (0x1)
      0000: 17 .
      => Send header, 137 bytes (0x89)
      0000: 50 4f 53 54 20 2f 20 48 54 54 50 2f 32 0d 0a 48 POST / HTTP/2..H
      0010: 6f 73 74 3a 20 63 75 72 6c 2e 73 65 0d 0a 55 73 ost: curl.se..Us
      0020: 65 72 2d 41 67 65 6e 74 3a 20 63 75 72 6c 2f 38 er-Agent: curl/8
      0030: 2e 32 2e 31 0d 0a 41 63 63 65 70 74 3a 20 2a 2f .2.1..Accept: */
      0040: 2a 0d 0a 43 6f 6e 74 65 6e 74 2d 4c 65 6e 67 74 *..Content-Lengt
      0050: 68 3a 20 33 0d 0a 43 6f 6e 74 65 6e 74 2d 54 79 h: 3..Content-Ty
      0060: 70 65 3a 20 61 70 70 6c 69 63 61 74 69 6f 6e 2f pe: application/
      0070: 78 2d 77 77 77 2d 66 6f 72 6d 2d 75 72 6c 65 6e x-www-form-urlen
      0080: 63 6f 64 65 64 0d 0a 0d 0a coded....
      => Send data, 3 bytes (0x3)
      0000: 6d 6f 6f moo
      …
      <= Recv header, 13 bytes (0xd)
      0000: 48 54 54 50 2f 32 20 32 30 30 20 0d 0a HTTP/2 200 ..
      <= Recv header, 22 bytes (0x16)
      0000: 73 65 72 76 65 72 3a 20 6e 67 69 6e 78 2f 31 2e server: nginx/1.
      0010: 32 31 2e 31 0d 0a 21.1..
      <= Recv header, 25 bytes (0x19)
      0000: 63 6f 6e 74 65 6e 74 2d 74 79 70 65 3a 20 74 65 content-type: te
      0010: 78 74 2f 68 74 6d 6c 0d 0a xt/html..
      <= Recv header, 29 bytes (0x1d)
      0000: 78 2d 66 72 61 6d 65 2d 6f 70 74 69 6f 6e 73 3a x-frame-options:
      0010: 20 53 41 4d 45 4f 52 49 47 49 4e 0d 0a SAMEORIGIN..
      <= Recv header, 46 bytes (0x2e)
      0000: 6c 61 73 74 2d 6d 6f 64 69 66 69 65 64 3a 20 54 last-modified: T
      0010: 68 75 2c 20 31 30 20 41 75 67 20 32 30 32 33 20 hu, 10 Aug 2023
      0020: 30 32 3a 30 35 3a 30 33 20 47 4d 54 0d 0a 02:05:03 GMT..
      <= Recv header, 28 bytes (0x1c)
      0000: 65 74 61 67 3a 20 22 32 30 64 39 2d 36 30 32 38 etag: "20d9-6028
      0010: 38 30 36 33 33 31 35 34 64 22 0d 0a 80633154d"..
      <= Recv header, 22 bytes (0x16)
      0000: 61 63 63 65 70 74 2d 72 61 6e 67 65 73 3a 20 62 accept-ranges: b
      0010: 79 74 65 73 0d 0a ytes..
      <= Recv header, 27 bytes (0x1b)
      0000: 63 61 63 68 65 2d 63 6f 6e 74 72 6f 6c 3a 20 6d cache-control: m
      0010: 61 78 2d 61 67 65 3d 36 30 0d 0a ax-age=60..
      <= Recv header, 40 bytes (0x28)
      0000: 65 78 70 69 72 65 73 3a 20 54 68 75 2c 20 31 30 expires: Thu, 10
      0010: 20 41 75 67 20 32 30 32 33 20 30 37 3a 30 36 3a Aug 2023 07:06:
      0020: 31 34 20 47 4d 54 0d 0a 14 GMT..
      …
      …
      <= Recv data, 867 bytes (0x363)
      0000: 3c 21 44 4f 43 54 59 50 45 20 48 54 4d 4c 20 50 <!DOCTYPE HTML P
      0010: 55 42 4c 49 43 20 22 2d 2f 2f 57 33 43 2f 2f 44 UBLIC "-//W3C//D
      0020: 54 44 20 48 54 4d 4c 20 34 2e 30 31 20 54 72 61 TD HTML 4.01 Tra
      0030: 6e 73 69 74 69 6f 6e 61 6c 2f 2f 45 4e 22 20 22 nsitional//EN" "
      0040: 68 74 74 70 3a 2f 2f 77 77 77 2e 77 33 2e 6f 72 http://www.w3.or
      0050: 67 2f 54 52 2f 68 74 6d 6c 34 2f 6c 6f 6f 73 65 g/TR/html4/loose
      0060: 2e 64 74 64 22 3e 0a 3c 68 74 6d 6c 20 6c 61 6e .dtd">.<html lan
      0070: 67 3d 22 65 6e 22 3e 0a 3c 68 65 61 64 3e 0a 3c g="en">.<head>.<
      0080: 74 69 74 6c 65 3e 63 75 72 6c 3c 2f 74 69 74 6c title>curl</titl
      0090: 65 3e 0a 3c 6d 65 74 61 20 6e 61 6d 65 3d 22 76 e>.<meta name="v
      00a0: 69 65 77 70 6f 72 74 22 20 63 6f 6e 74 65 6e 74 iewport" content
      00b0: 3d 22 77 69 64 74 68 3d 64 65 76 69 63 65 2d 77 ="width=device-w
      00c0: 69 64 74 68 2c 20 69 6e 69 74 69 61 6c 2d 73 63 idth, initial-sc
      00d0: 61 6c 65 3d 31 2e 30 22 3e 0a 3c 6d 65 74 61 20 ale=1.0">.<meta
      00e0: 63 6f 6e 74 65 6e 74 3d 22 74 65 78 74 2f 68 74 content="text/ht
      00f0: 6d 6c 3b 20 63 68 61 72 73 65 74 3d 55 54 46 2d ml; charset=UTF-
      0100: 38 22 20 68 74 74 70 2d 65 71 75 69 76 3d 22 43 8" http-equiv="C
      0110: 6f 6e 74 65 6e 74 2d 54 79 70 65 22 3e 0a 3c 6c ontent-Type">.<l
      0120: 69 6e 6b 20 72 65 6c 3d 22 73 74 79 6c 65 73 68 ink rel="stylesh

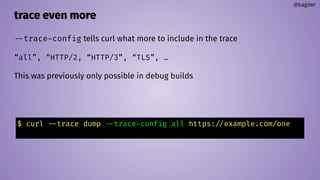

- 37. trace even more
  - --trace-config tells curl what more to include in the trace
  - “all”, “HTTP/2”, “HTTP/3”, “TLS”, …
  - This was previously only possible in debug builds

      $ curl --trace dump --trace-config all https://example.com/one

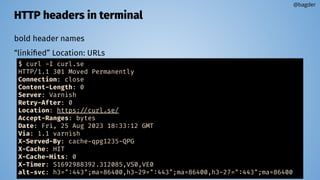

- 38. HTTP headers in terminal
  - Bold header names
  - “Linkified” Location: URLs

      $ curl -I curl.se
      HTTP/1.1 301 Moved Permanently
      Connection: close
      Content-Length: 0
      Server: Varnish
      Retry-After: 0
      Location: https://curl.se/
      Accept-Ranges: bytes
      Date: Fri, 25 Aug 2023 18:33:12 GMT
      Via: 1.1 varnish
      X-Served-By: cache-qpg1235-QPG
      X-Cache: HIT
      X-Cache-Hits: 0
      X-Timer: S1692988392.312085,VS0,VE0
      alt-svc: h3=":443";ma=86400,h3-29=":443";ma=86400,h3-27=":443";ma=86400

- 39. Better option help
  - Coming in 8.10.0
  - curl -h [option]

      $ curl -h --fail-early
      --fail-early
      Fail and exit on the first detected transfer error.
      When curl is used to do multiple transfers on the command line, it
      attempts to operate on each given URL, one by one. By default, it
      ignores errors if there are more URLs given and the last URL's
      success determines the error code curl returns. Early failures are
      "hidden" by subsequent successful transfers.
      Using this option, curl instead returns an error on the first
      transfer that fails, independent of the amount of URLs that are
      given on the command line. This way, no transfer failures go
      undetected by scripts and similar.
      This option does not imply --fail, which causes transfers to fail
      due to the server's HTTP status code. You can combine the two
      options, however note --fail is not global and is therefore
      contained by --next.
      This option is global and does not need to be specified for each
      use of --next. Providing --fail-early multiple times has no extra
      effect. Disable it again with --no-fail-early.
      Example:
      curl --fail-early https://example.com https://two.example
      See also --fail and --fail-with-body.

- 41. etags
  - Do the transfer only if the remote resource is “different”

      curl --etag-save remember.txt -O https://example.com/file
      curl --etag-compare remember.txt -O https://example.com/file

  - Both can be used at once for convenient updates:

      curl --etag-save remember.txt --etag-compare remember.txt -O https://example.com/file

  - Better than time conditions

- 42. HTTP alt-svc
  - Server tells client: there are one or more alternatives at "another place"
  - The Alt-Svc: response header
  - Each entry has an expiry time
  - Only recognized over HTTPS

      curl --alt-svc altcache.txt https://example.com/

  - The alt-svc cache is a text based readable file

- 43. HTTP HSTS
  - HSTS: HTTP Strict Transport Security
  - This host name requires HTTPS going forward
  - The Strict-Transport-Security: response header
  - Only recognized over HTTPS
  - Each entry has an expiry time

      curl --hsts hsts.txt http://example.com/

  - The HSTS cache is a text based readable file

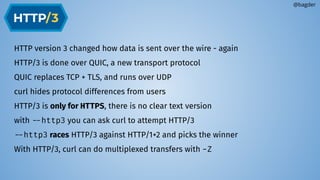

- 44. HTTP version 3
  - Changed how data is sent over the wire, again
  - HTTP/3 is done over QUIC, a new transport protocol
  - QUIC replaces TCP + TLS, and runs over UDP
  - curl hides protocol differences from users
  - HTTP/3 is only for HTTPS; there is no clear text version
  - With --http3 you can ask curl to attempt HTTP/3
  - --http3 races HTTP/3 against HTTP/1+2 and picks the winner
  - With HTTP/3, curl can do multiplexed transfers with -Z

- 45. Connection race
  - curl uses both IPv6 and IPv4 when possible, and races them against each other
  - “Happy eyeballs”
  - Restrict to a fixed IP version with --ipv4 or --ipv6
  - DNS results for curl.se:

      curl.se has address 151.101.129.91
      curl.se has address 151.101.193.91
      curl.se has address 151.101.1.91
      curl.se has address 151.101.65.91
      curl.se has IPv6 address 2a04:4e42:800::347
      curl.se has IPv6 address 2a04:4e42:a00::347
      curl.se has IPv6 address 2a04:4e42:c00::347
      curl.se has IPv6 address 2a04:4e42:e00::347
      curl.se has IPv6 address 2a04:4e42::347
      curl.se has IPv6 address 2a04:4e42:200::347
      curl.se has IPv6 address 2a04:4e42:400::347
      curl.se has IPv6 address 2a04:4e42:600::347

  - [Diagram: the client resolves curl.se through DNS, then races the IPv6 addresses against the IPv4 addresses]

- 46. Racing
  - Same curl.se DNS results as on the previous slide
  - [Diagram: the client races four connection attempts to curl.se: h2 over IPv6, h2 over IPv4, h3 over IPv4 and h3 over IPv6]

- 47. Future

- 49. Going next?
  - The Internet does not stop or slow down
  - Protocols and new ways of doing Internet transfers keep popping up
  - curl will keep expanding, get new features, get taught new things
  - We, the community, make curl do what we think it should do
  - You can affect what’s next for curl






