The different types of machine learning explained

Rigorous experimentation is key to building machine learning models. Learn about the main types of ML models and the many factors that go into training the right one for the task.

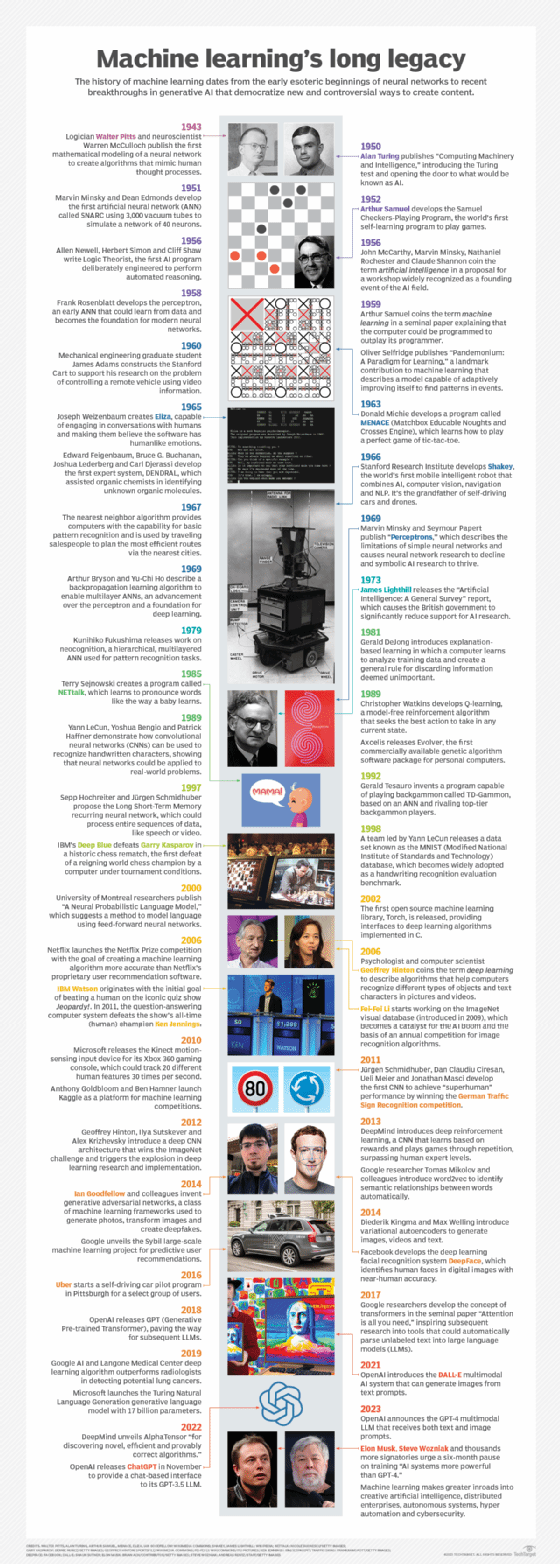

Machine learning is a broad field that uses automated training techniques to discover better algorithms. The term was coined by Arthur Samuel at IBM in 1959. A subset of AI, machine learning encompasses many techniques. An example is deep learning, an approach which relies on artificial neural networks to learn. There are many other kinds of machine learning techniques commonly used in practice, including some that are used to train deep learning algorithms.

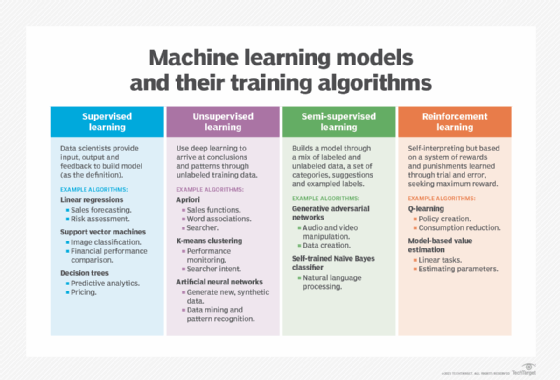

Practitioners often choose from four main types of machine learning models based on their respective suitability to the way the data is prepared.

- Supervised learning models consume data that has been prelabeled by humans.

- Unsupervised learning models discover patterns in data that hasn't been previously labeled.

- Semi-supervised learning models involve an iterative process that works with both labeled and unlabeled data.

- Reinforcement learning models use algorithms that can tune models in response to feedback about performance after deployment.

Choosing the right machine learning model type

Selecting the type of machine learning model is a mix of art and science. It's important to use an experimental and iterative process to determine the most valuable approach in terms of performance, accuracy, reliability and explainability. Each model type has its strengths and weaknesses. The right choice will depend on factors such as the provenance of your data and the class of algorithms suited to the problem you're looking to solve. Machine learning practitioners are likely to combine multiple machine learning types and various algorithms within those types to achieve the best outcome.

Data scientists, for example, might analyze a data set using unsupervised techniques to achieve a basic understanding of relationships within a data set -- for example, how the sale of a product correlates with its position on a store's shelf. Once that relationship is confirmed, practitioners might use supervised techniques with labels that describe a product's shelf location. Semi-supervised techniques could automatically compute shelf location labels. After the machine learning model is deployed, reinforcement learning could fine-tune the model's predictions based on actual sales.

This article is part of

What is machine learning? Guide, definition and examples

A deep understanding of the data is essential because it serves as a project's blueprint, said David Guarrera, EY America's generative AI leader. The performance of a new machine learning model depends on the nature of the data, the specific problem and what's required to solve it.

Neural networks, for example, might be best for image recognition tasks, while decision trees could be more suitable for a different type of classification problem. "It's often about finding the right tool for the right job in the context of machine learning and about fitting to the budget and computational constraints of the project," Guarrera explained.

Here's a deeper look at the four main types of machine learning models.

1. Supervised learning model

Supervised learning models work with data that has been previously labeled. The downside is that someone or some process needs to apply these labels. Applying labels after the fact requires a lot of time and effort. In some cases, these labels can be generated automatically as part of an automation process, such as capturing the location of products in a store. Classification and regression are the most common types of supervised learning algorithms.

- Classification algorithms decide the category of an entity, object or event as represented in the data. The simplest classification algorithms answer binary questions such as yes/no, sales/not-sales or cat/not-cat. More complicated algorithms lump things into multiple categories like cat, dog or mouse. Popular classification algorithms include decision trees, logistic regression, random forest and support vector machines.

- Regression algorithms identify relationships within multiple variables represented in a data set. This approach is useful when analyzing how a specific variable such as product sales correlates with changing variables like price, temperature, day of week or shelf location. Popular regression algorithms include linear regression, multivariate regression, decision tree and least absolute shrinkage and selection operator (lasso) regression.

Common use cases are classifying images of objects into categories, predicting sales trends, categorizing loan applications and applying predictive maintenance to estimate failure rates.

2. Unsupervised learning model

Unsupervised learning models automate the process of discerning patterns present within a data set. These patterns are particularly helpful in exploratory data analysis to determine the best way to frame a data science problem. Clustering and dimensional reduction are two common unsupervised learning algorithmic types.

- Clustering algorithms help group similar sets of data together based on various criteria. Practitioners can segment data into different groups to identify patterns within each group.

- Dimension reduction algorithms explore ways to compact multiple variables efficiently for a specific problem.

These algorithms include approaches to feature selection and projection. Feature selection helps prioritize characteristics that are more relevant to a given question. Feature projection explores ways to find deeper relationships among multiple variables that can be quantified into new intermediate variables that are more appropriate for the problem at hand.

Common clustering and dimension reduction use cases include grouping inventory based on sales data, associating sales data with a product's store shelf location, categorizing customer personas and identifying features in images.

3. Semi-supervised learning model

Semi-supervised learning models characterize processes that use unsupervised learning algorithms to automatically generate labels for data that can be consumed by supervised techniques. Several approaches can be used to apply these labels, including the following:

- Clustering techniques label data that looks similar to labels generated by humans.

- Self-supervised learning techniques train algorithms to solve a pretext task that correctly applies labels.

- Multi-instance techniques find ways to generate labels for a collection of examples with specific characteristics.

4. Reinforcement learning model

Reinforcement learning models are often used to improve models after they have been deployed. They can also be used in an interactive training process, such as teaching an algorithm to play games in response to feedback about individual moves or to determine wins and losses in a round of games like chess or Go.

The core technique requires establishing a set of actions, parameters and end values that are tuned through trial and error. At each step, the algorithm makes a prediction, move or decision. The result is compared to results in a game or real-world scenario. A penalty or reward is sent back to refine the algorithm over time.

The most common reinforcement learning algorithms use various neural networks. In self-driving applications, for example, an algorithm's training might be based on how it responds to data recorded from cars or synthetic data that represents what the car's sensors might see at night.

Machine learning model vs. machine learning algorithm

The terms machine learning model and machine learning algorithm are sometimes conflated to mean the same thing. But from a data science perspective, they're very different. Machine learning algorithms are used in training machine learning models.

Machine learning algorithms are the brains of the models, explained Brian Steele, AI strategy consultant at Curate Partners. The algorithms contain code that's used to form predictions for the models. The data the algorithms are trained on often determines the types of outputs the models create. The data acts as a source of information, or inputs, for the algorithm to learn from, so the models can create understandable and relevant outputs.

Put another way, an algorithm is a set of procedures that describes how to do something, and a machine learning model is a mathematical representation of a real-world problem trained on machine learning algorithms, said Anantha Sekar, UK Geo presales at Tata Consultancy Services. "So, the machine learning model is a specific instance," he said, "while machine learning algorithms are a suite of procedures on how to train machine learning models."

The algorithm shapes and influences what the model does. The model considers the what of the problem, while the algorithm provides the how for getting the model to perform as desired. Data is the third relevant entity because the algorithm uses the training data to train the machine learning model. In practice, therefore, a machine learning outcome depends on the model, the algorithms and the training data.

Additional popular types of machine learning algorithms

There are hundreds of types of machine learning algorithms, making it difficult to select the best approach for a given problem. Furthermore, one algorithm can sometimes be used to solve different types of problems such as classification and regression.

"Algorithms are the underlying blueprints for constructing machine learning models," Guarrera said. These algorithms define the rules and techniques used to learn from the data. They contain not only the logic for pre-processing and preparing data, but also the trained and learned patterns that can be used to make predictions and decisions based on new data.

As data scientists navigate the machine learning algorithm landscape to determine the most important areas to focus on, it's important to consider metrics that represent utility, breadth of applicability, efficiency and reliability, advised Michael Shehab, principal and labs technology and innovation leader at PwC. He also emphasized an algorithm's ability to support a wide breadth of problems instead of just solving a single task. Some algorithms are more sample efficient and require less training data to arrive at a well-performing model, while others are more compute efficient at training and inference time and don't require the compute resources needed to operate them.

"There is no singular best machine learning algorithm," Shehab said. "The right option for any company is one that has been carefully selected through rigid experimentation and evaluation to best meet the criteria defined by the problem."

Some of the more popular algorithms and the models they work with include the following:

- Artificial neural networks train a network of interconnected neurons, each of which runs a particular inference algorithm that translates inputs into outputs fed to nodes in subsequent layers of the network. Models: unsupervised, semi-supervised and reinforcement.

- Decision trees evaluate a data point through a set of tests on a variable to arrive at a result. They're commonly used for classification and regression. Model: supervised.

- K-means clustering automates the process of finding groups in a data set in which the number of groups is represented by the variable K. Once these groups are identified, it assigns each data point to one of these groups. Model: unsupervised.

- Linear regression finds a relationship between continuous variables. Model: supervised.

- Logistic regression estimates the probability of a data point being in one category by identifying the best formula for splitting events into two categories. It's commonly used for classification. Model: supervised.

- Naive Bayes uses Bayes' theorem to classify categories based on statistical probabilities showing the relationship of patterns between variables in the data set. Model: supervised.

- Nearest neighbors algorithms look at multiple data points around a given data point to determine its category. Model: supervised.

- Random forests organizes an ensemble of separate algorithms to generate a decision tree that can be applied to classification problems. Model: supervised.

- Support vector machine uses labeled data to train a model that assigns new data points to various categories. Model: supervised.

Best practices for training machine learning models

Data scientists will each develop their own approach to training machine learning models. Training generally starts with preparing the data, identifying the use case, selecting training algorithms and analyzing the results. Following is a set of best practices developed by Shehab for PwC:

- Start simple. Model training should begin with the simplest approach. Complexity can then be added in the form of model features, feature sophistication and advanced learning algorithms. The simpler model serves as a basis for determining if the performance obtained through the added complexity will be worth the additional investment in time and technical costs.

- Create a consistent model development process. Given its highly iterative nature, a consistent development process should be supported with tools that provide comprehensive experiment tracking so data scientists can more readily pinpoint where their models can be improved.

- Identify the right problem to solve. Look for improperly defined objectives, wrong areas of focus and unrealistic expectations, all of which are often responsible for a model's poor performance or failure to produce tangible value. Building a model requires solid grounding to properly assess its development.

- Understand the historical data. The model is only as good as the data it will be trained on, so start with a firm understanding of how that data behaves, the overall quality and completeness of the data, important trends or elements of the data set related to the task at hand and any biases that may be present.

- Ensure accuracy. To avoid introducing bias, providing the model with inappropriate feedback or reinforcing the wrong behavior, carefully set measurable benchmarks for model performance. A machine learning algorithm learns through feedback from an objective or outcome set in the training data. If the calculations that generate the feedback aren't carefully defined and aligned to the expected values, the result could be a poor or non-functioning model.

- Focus on explainability. Data scientists who focus on why a model performs the way it does will produce better models. This approach requires more comprehensive model validation and testing. Explainability also provides insights into a model's underperformance, hypotheses of how to enhance performance and a global view of how a model functions to help develop trust among consumers.

- Continue training. Model training is an ongoing process over the life of the model, including the production stage, so it can be continuously improved.

Is there a best machine learning model?

In general, there is no one best machine learning model. "Different models work best for each problem or use case," Sekar said. Insights derived from experimenting with the data, he added, may lead to a different model. The patterns of data can also change over time. A model that works well in development might have to be replaced with a different model.

A specific model can be regarded as the best only for a specific use case or data set at a certain point in time, Sekar said. The use case can add more nuance. Some uses, for example, may require high accuracy while others demand higher confidence. It's also important to consider environmental constraints in model deployment, such as memory, power and performance requirements. Other use cases may have explainability requirements that could drive decisions toward a different type of model.

Data scientists also need to consider the operational aspects of models after deployment when prioritizing one type of model over another. These considerations may include how the raw data is transformed for processing, fine-tuning processes, prompt engineering and the need to mitigate AI hallucinations. "Choosing the best model for a given situation," Sekar advised, "is a complex task with many business and technical aspects to be considered."

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.